This week, Nvidia is holding its GPU Technology Conference (GTC). One of the big highlights was Nvidia’s launch of Grace, which the company describes as a next-generation ARM-based computing platform designed specifically for giant-scale AI systems and high-performance computing (HPC) applications. The processor (CPU) is named after Grace Hopper, a computer scientist who pioneered programming languages in the 1950s.

AI models are developing rapidly, and the amount of data they process is growing exponentially. Today’s largest models contain several billion parameters, and on average they double in size every 2.5 months. Training them efficiently requires a new type of processor that can be tightly coupled to a graphics processor (GPU) to eliminate bottlenecks in the system. Grace is therefore designed for data-intensive applications such as AI.
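To put that doubling rate in perspective, here is a back-of-the-envelope calculation. It assumes idealized, steady exponential growth at exactly one doubling every 2.5 months (Nvidia’s figure is an average), and `growth_factor` is a hypothetical helper written only for this illustration.

```python
def growth_factor(months: float, doubling_period_months: float = 2.5) -> float:
    """Size multiplier after `months`, assuming one doubling every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


# One year of growth at one doubling per 2.5 months: 2 ** (12 / 2.5) = 2 ** 4.8
print(f"{growth_factor(12):.1f}x per year")
```

Under this assumption, a model would grow roughly 28-fold in a single year, which illustrates why the hardware needs to scale so aggressively.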

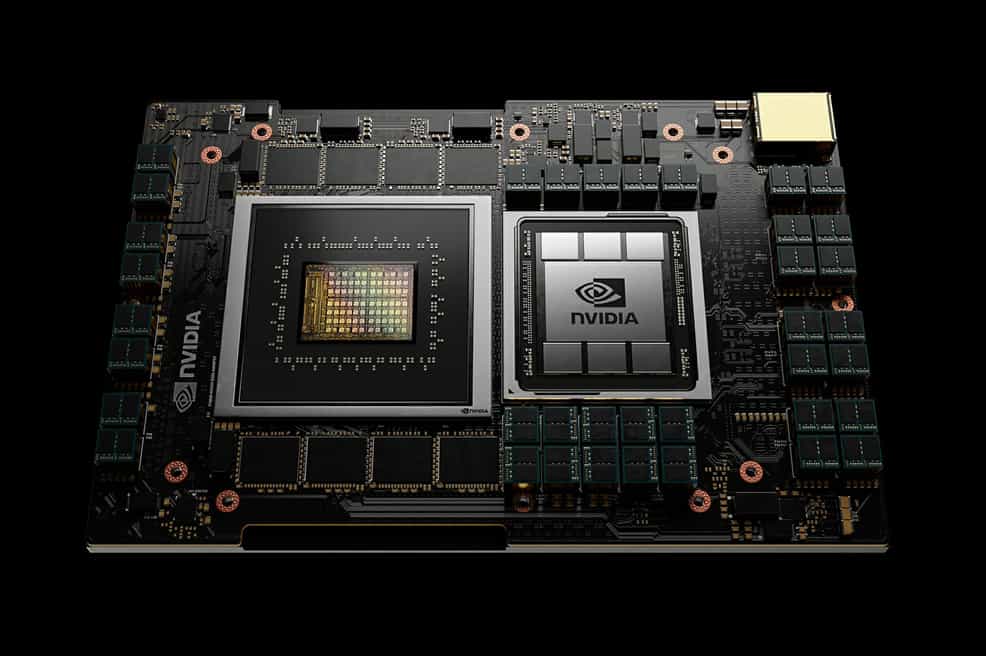

The chip is ARM-based rather than x86-based and uses next-generation Arm “Neoverse” processor cores. According to Nvidia, the new architecture delivers up to ten times higher performance than today’s x86-based systems when training the largest AI models. Grace is expected to ship in 2023. The same year, Alps, a Grace-based supercomputer with 20 exaflops of AI performance, is scheduled to come online at the Swiss National Supercomputing Centre (CSCS). The US Department of Energy’s Los Alamos National Laboratory has also announced plans for its own Grace-based supercomputer.

Alps will be used for climate and weather simulations, as well as research in astrophysics, molecular dynamics, quantum physics for the Large Hadron Collider, and more. It will also be able to train GPT-3, one of the world’s largest natural language processing models, in just two days. That is almost seven times faster than Nvidia’s Selene, which is currently recognized as the world’s leading supercomputer dedicated to AI.