Deepfakes are fake videos, photos and audio files that are very difficult to tell apart from the real thing. Unsurprisingly, they can make misinformation an even bigger problem than it already is. But Microsoft has built a tool to help us separate the wheat from the chaff.

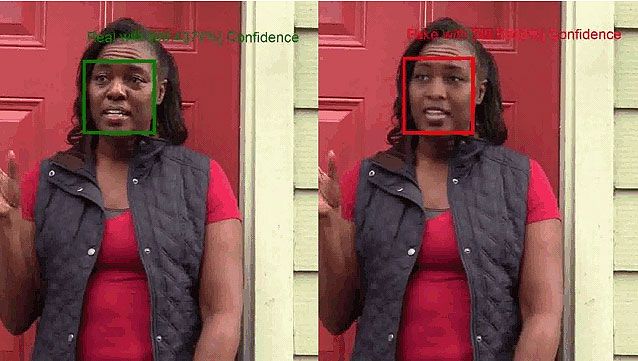

Microsoft Video Authenticator uses AI to estimate how likely it is that a video has been tampered with. The tool shows, in real time, how likely it is that each individual frame of the video has been manipulated. So even if only a very small part of the video has been altered, Microsoft Video Authenticator can point out which part of it is untrustworthy.

To tackle the problem from the other side, Microsoft has also developed a technology that lets the creator of a video give it a kind of digital "watermark". If the file is later changed, the change is detected, and browsers and other software can display a message warning that the video has been tampered with.
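The article doesn't say how this "watermark" works under the hood. As a rough sketch of the general idea, assuming the creator simply records a cryptographic fingerprint (a SHA-256 hash) of the file when it is published, a checker can recompute that hash later and compare the two: change even a single byte and the hashes no longer match. The function names `fingerprint` and `is_unmodified` are hypothetical and are not part of any Microsoft product.

```python
import hashlib

# Simplified illustration only: a plain SHA-256 digest stands in for the
# "watermark". It is NOT Microsoft's actual implementation.


def fingerprint(data: bytes) -> str:
    """Compute a SHA-256 digest of the file's bytes at publication time."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, published_fingerprint: str) -> bool:
    """Recompute the digest and compare it with the one recorded at publication."""
    return fingerprint(data) == published_fingerprint


# Stand-in for a video file: any bytes work for this illustration.
original = bytes(range(256)) * 4
published = fingerprint(original)

# Flip every bit of a single byte to simulate a tiny edit.
edited = bytearray(original)
edited[500] ^= 0xFF
tampered = bytes(edited)

print(is_unmodified(original, published))  # True
print(is_unmodified(tampered, published))  # False
```

A bare hash is only half the story: anyone who edits the video could simply publish a new hash alongside it. A real system therefore has to tie the fingerprint to the original creator, for example with a digital signature or certificate, which this sketch leaves out.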

For those who want to learn more about deepfakes, Microsoft has teamed up with USA Today to create a quiz where you can test how good you are at spotting them.