AI can be very helpful in healthcare, for example by quickly searching through large numbers of images of cell samples for signs of cancer. But AI is not infallible, so its results cannot be trusted blindly.

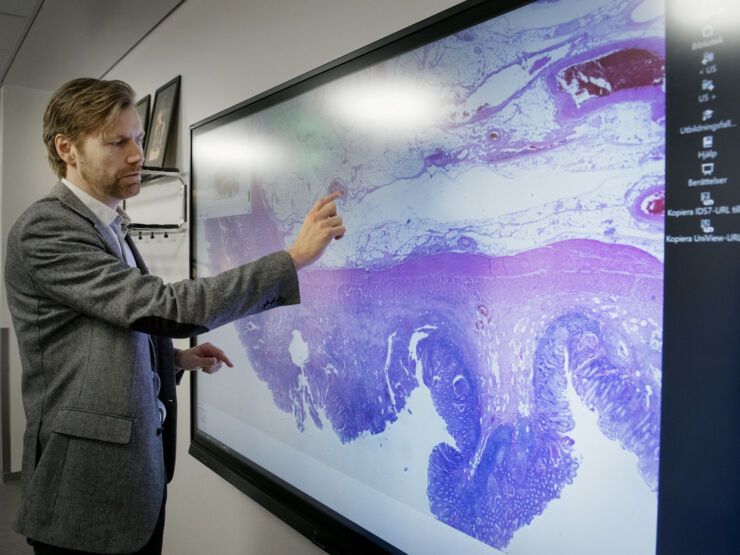

"We expect the AI to make mistakes. We know we can make it better over time by telling it when it is wrong and when it is right. While looking at those aspects, you also need to work on making the system efficient and effective to use. It is also important that users feel that the machine learning component adds something positive", says Martin Lindvall, researcher at Linköping University, in a press release.

In his research, Martin Lindvall has investigated the problems that can arise when an AI has to look for cancer in images of tissue samples. Examples include a change of manufacturer for the chemicals used to stain the tissue sections, variation in how thick the sections are, or dust on the glass in the scanner. Any of these can cause the model that the AI has built up through machine learning to stop working.

"Exactly these factors are known now, and AI developers control for them. But we cannot rule out that more such disturbing factors will be discovered in the future. That is why we want a barrier that also protects against factors that we do not yet know about", says Martin Lindvall.

These problems make it difficult for doctors and patients to trust the AI's results. But letting the AI work together with a human pathologist offers the best of both worlds. The AI can go through large numbers of samples very quickly and help the pathologist see sooner what is important in the image.

The AI then points out the areas in the image that it thinks look suspicious. If the doctor does not see anything in those areas, the sample is considered cancer-free. The goal is for the doctor to feel confident in the result, for the work to go faster, and for no wrong decisions to be made.

"Most users start in the same way. They look at what the AI is proposing, but ignore it. Over time, they build a sense of security and use the AI more. So having this kind of user interaction in the system is partly a safety barrier, and partly it makes the pathologist feel safe. The user is in the driver's seat compared to more autonomous AI products", says Martin Lindvall.

Six pathologists have tested the AI, and the result was that they could work faster. The AI also gets better over time, because the doctors continually indicate whether each of the AI's proposals is right or wrong.